Harness: Your Context

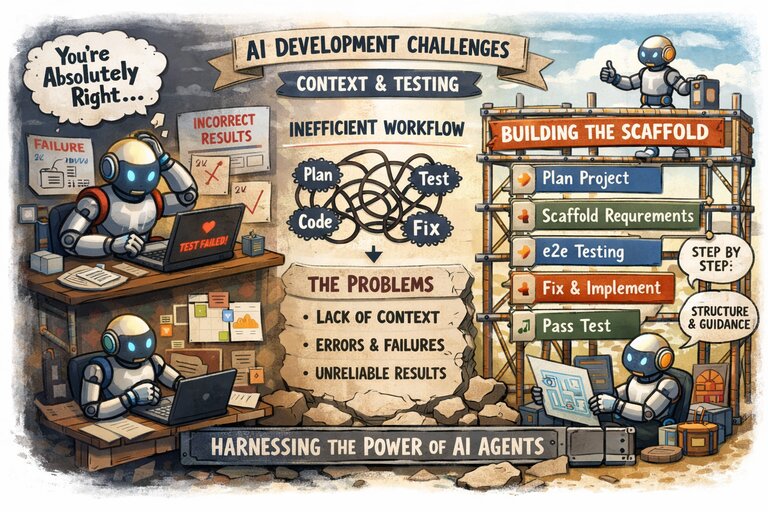

TLDR: If you want agents to perform better, building out the scaffolding for the agent is the most important task. I am creating a small series of blog posts about my findings. This post outlines the foundations and the problems that we are facing with AI.

Context, context, context! This is by far the number one thing that your coding agents need access to. I have been using Claude Code for the past 6 months, and I have to say it has been a wild journey.

I use Claude Code on my personal projects. I gave a presentation to my team about Claude Code, and the following day we got our subscriptions to the service. I have built out a multi-agent workflow that generates Database Correction Forms. And then at the end, who has to review it? Me. I have built out many agents that perform tasks on tools that we use every day, and I run weekly workshops with my team that span several hours, so that they can grasp the ideas and power behind AI agents.

All in all, the thing that keeps coming up is the inability of AI agents to get things “correct”. The dreaded “You’re Absolutely Right!” has been lingering in the back of my mind for the last couple of months, and I needed a solution.

Unlike my work projects, my personal projects are primarily greenfield development where things are easy for the AI to change and adapt to, and the code base is not as large, but it is growing.

At work I have been thinking really hard about the challenges that I have with AI, and I found myself repeating development steps. I would plan out a solution, code it with supporting unit tests, and then perform e2e testing to ensure that the system works. During this e2e phase, I found myself having the AI agent redo some of the implementation work. I would have to stop in the middle of the e2e test, have the agent generate a plan, and then fix the implementation that we had worked on a few days earlier. This got me thinking that I was doing something very inefficient.

Current Workflow

1. plan out the project

2. explore the code base

3. write a work.md file

4. implement the code

5. implement the unit tests

6. (repeat 1-5)

7. start e2e test

8. (repeat 1-6)

There is a better way to do it. I can start with the plan and the set of requirements, then write the e2e tests first. They will fail at this point, but at least the scaffolding of the test exists. Then, at each failure in the e2e test, I start work on the implementation for that step. This way the implementation keeps pace with the progress we are making in the e2e test.

A Slightly Better Workflow

1. plan out the entire project

2. write e2e test

3. run e2e test (fails)

4. explore the code base

5. plan what needs to be implemented

6. write a work.md file

7. implement the code

8. implement the unit tests

9. pass e2e test

10. (repeat 2-9)
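The loop in the list above can be sketched in a few lines of Python. This is only a minimal illustration under my own assumptions: the step names (`create_form`, `validate_form`, `submit_form`) and the `implemented` set are hypothetical stand-ins, and "implementing" a step here is just a placeholder for the explore, plan, implement, and unit-test work.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Step:
    name: str
    check: Callable[[], bool]  # returns True when this part of the flow works


def first_failing_step(steps: list[Step]) -> Optional[str]:
    """Run the e2e steps in order and return the name of the first one that fails."""
    for step in steps:
        try:
            ok = step.check()
        except NotImplementedError:
            ok = False  # code that does not exist yet counts as a failing step
        if not ok:
            return step.name
    return None  # every step passed


# Hypothetical feature set: nothing is implemented at the start.
implemented: set[str] = set()


def make_check(feature: str) -> Callable[[], bool]:
    def check() -> bool:
        if feature not in implemented:
            raise NotImplementedError(feature)
        return True
    return check


# Hypothetical e2e flow, written before any implementation exists.
e2e_steps = [
    Step(name, make_check(name))
    for name in ("create_form", "validate_form", "submit_form")
]


def run_loop() -> list[str]:
    """Repeat: run the e2e test, implement whatever failed, run it again."""
    order = []
    while (failed := first_failing_step(e2e_steps)) is not None:
        order.append(failed)
        implemented.add(failed)  # stand-in for explore, plan, implement, unit test
    return order
```

The point of the sketch is that the e2e test decides what gets worked on next: each run surfaces exactly one failing step, and the implementation only ever advances as far as the test demands.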

But wait, we can do better!

Over the last month I have been exploring more and reading up on what others are doing, most notably what people at Anthropic are doing. Jack Clark, a co-founder of Anthropic, has a blog, and his latest article caught my attention. In it Jack mentions “The results show that AI systems, especially when given a software scaffold, can perform at the same level as security professionals”, and he concludes: “The messages keep being given from multiple domains, ranging from cybersecurity (here), to science, to math proving is that if you stick a modern LLM inside a scaffold (which basically serves as a proxy for a management structure and set of processes you might ask humans to follow), the AI system performs a lot better.”

That word “scaffold” made me think really hard about the tooling and design that guide an agent to successfully complete the work it is given. Recently I have been building a workflow around this “scaffold”, or “harness”, that I can place the AI agent in.

I plan to write further blog posts about this “harness”, implement it, and see if it works as Jack describes. Can I build out the scaffolding needed to make agents perform more effectively?

An Efficient Workflow (MVP)

1. plan out the entire project

2. scaffold the requirements for the project

3. write e2e test

4. run e2e test (fails)

5. scaffold the requirements for the failing e2e step

6. read the scaffold

7. implement the code

8. implement the unit tests

9. pass e2e test

10. (repeat 3-9)
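To make the "scaffold the requirements for the failing e2e step" idea a little more concrete, here is one possible shape it could take. This is a rough sketch, not the harness itself: the `Requirement` class, the `render_scaffold` function, and the Markdown layout are all assumptions of mine for illustration. The idea is simply that the requirements for a single failing step get written into a small document that the agent reads before it touches the code.

```python
from dataclasses import dataclass, field


@dataclass
class Requirement:
    summary: str
    acceptance: list[str] = field(default_factory=list)  # checks that define "done"


def render_scaffold(failing_step: str, requirements: list[Requirement]) -> str:
    """Render the requirements for one failing e2e step as a Markdown document."""
    lines = [f"# Scaffold for failing e2e step: {failing_step}", ""]
    for i, req in enumerate(requirements, start=1):
        lines.append(f"{i}. {req.summary}")
        for item in req.acceptance:
            lines.append(f"   - {item}")
    return "\n".join(lines)


# Hypothetical usage: the step name and requirement text are made up.
scaffold = render_scaffold(
    "validate_form",
    [Requirement("Reject empty fields", ["returns an error"])],
)
print(scaffold)
```

Keeping the scaffold scoped to one failing step keeps the context small and specific, which is the whole point of putting the agent in a harness in the first place.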