
Harness: The Ralph Wiggum

TLDR: it’s Spec Driven Development.

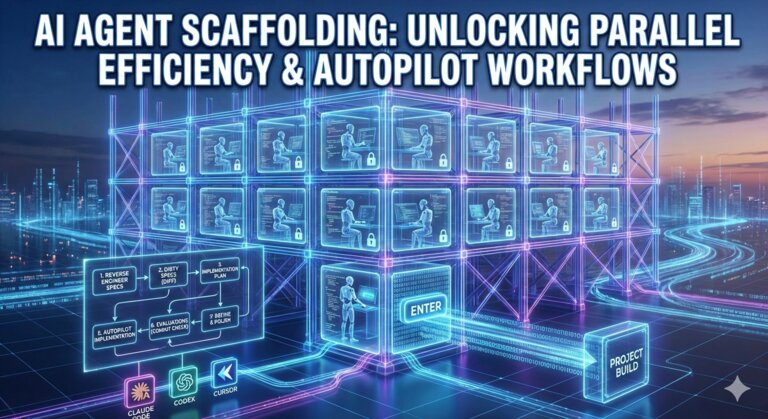

A couple of weeks ago, in my first article, I mentioned scaffolding, a term that Jack Clark, one of Anthropic’s co-founders and the author of the Import AI newsletter, used multiple times in one of his posts. It is closely tied to the idea of harnesses. Claude Code, Codex, and Cursor are all examples of a harness.

Each of these also has a scaffold built specifically for that type of tool: the environment that surrounds the agent.

So, looking at Claude Code and the other agentic coding tools, how can we expand the environment around the agent, and how can we use the agent more efficiently?

Anthropic’s recent blog post about building a C compiler mentions that they created a scaffold to organize up to 16 agents working in parallel. That is what a scaffold provides: a way to harness the power of multiple agents.

No, they didn’t use a swarm or a cluster of agents to do the job. The agents ran in parallel, in isolated environments, with a locking mechanism to make sure they were not duplicating work. These were 16 agents, each working on its own thing. The number of distinct agent types could have been higher, but the article does not say.
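To make the locking idea concrete, here is a minimal sketch of how parallel agents could avoid duplicating work. It assumes file-based locks in a shared directory. The function names, the `.lock` suffix, and the task names are all hypothetical, and this is not necessarily how Anthropic's scaffold does it.

```python
import os
from pathlib import Path
from typing import Optional


def try_claim(lock_dir: Path, task: str, agent_id: str) -> bool:
    """Atomically claim a task. Returns False if another agent already holds it."""
    lock_dir.mkdir(parents=True, exist_ok=True)
    lock_path = lock_dir / f"{task}.lock"  # hypothetical naming scheme
    try:
        # O_CREAT | O_EXCL fails if the file already exists,
        # so only one claimant can create the lock.
        fd = os.open(lock_path, os.O_CREAT | os.O_EXCL | os.O_WRONLY)
    except FileExistsError:
        return False
    with os.fdopen(fd, "w") as f:
        f.write(agent_id)  # record who owns the task
    return True


def next_task(lock_dir: Path, tasks: list[str], agent_id: str) -> Optional[str]:
    """Return the first task this agent manages to claim, or None if all are taken."""
    for task in tasks:
        if try_claim(lock_dir, task, agent_id):
            return task
    return None


def release(lock_dir: Path, task: str) -> None:
    """Drop the lock so the task can be claimed again."""
    (lock_dir / f"{task}.lock").unlink(missing_ok=True)
```

Each agent would call `next_task` with its own ID before starting work, and `release` if it gives up on a task. The agents never talk to each other; the lock directory is the only coordination point.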

At work, I am currently building a scaffold for the agents we make. Our current implementation is built on the Ralph Wiggum workflow. I use it because it just works. I have also tried Get Shit Done and am still working with it, so hopefully I will have a blog post out about it.

In my previous blog post, on brownfield projects, I mentioned that I start by reverse engineering the specs from the implementation in the current code base. I do this using a prompt provided here.

1. Reverse engineer the specs.

2. Commit.

3. Talk to the agent and make the specs dirty.

4. Commit for a diff of the specs.

5. Create an implementation plan.

6. Talk to the agent about implementation details.

7. Implement.

8. Evaluations.

9. Evaluate all implementation commits against the commit in step 2.

10. Refine and polish, if needed.
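The evaluation step can be sketched with plain git. This is a minimal sketch, assuming the commit made right after reverse engineering the specs is used as the baseline and that the implementation commits sit on the current branch after it. The function names are hypothetical; the git subcommands (`rev-list`, `diff --stat`) are standard.

```python
import subprocess


def implementation_commits(baseline: str, repo: str = ".") -> list[str]:
    """Hashes of all commits made after the baseline, oldest first."""
    out = subprocess.run(
        ["git", "-C", repo, "rev-list", "--reverse", f"{baseline}..HEAD"],
        check=True, capture_output=True, text=True,
    ).stdout
    return out.split()


def diff_command(baseline: str, commit: str, repo: str = ".") -> list[str]:
    """argv for a summary of what changed between the baseline and one commit."""
    return ["git", "-C", repo, "diff", "--stat", baseline, commit]


def evaluation_report(baseline: str, repo: str = ".") -> dict[str, str]:
    """Map each implementation commit to its diff summary against the baseline."""
    report = {}
    for commit in implementation_commits(baseline, repo):
        result = subprocess.run(
            diff_command(baseline, commit, repo),
            check=True, capture_output=True, text=True,
        )
        report[commit] = result.stdout
    return report
```

Calling `evaluation_report("<baseline-hash>")` inside the repo gives one diff summary per implementation commit, which can then be handed back to the agent or read by hand.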

After creating the specs, I need to plan how we are going to change them. This is where I dig deep with the agent and talk to it. Talk to it like it knows everything about the system. This is usually where you find gaps in the agent’s knowledge. If there is a gap, you probably need to create more specs and provide that information to the agent as well.

I can talk with the agent for an hour, two hours, maybe even three, depending on how large and how detailed I need my specifications to be. Sometimes it is as easy as copying and pasting a comment I made when elaborating a story.

I then run this a couple of times to ensure that the specs are complete. I do this for each topic needed to fulfill the implementation I am working on.

I then generate the implementation plan. I run this a couple of times too, depending on the scope of the work.

If I need special implementation details, I provide them to the agent. My favorite MCP is Context7. If Context7 is not available, I clone the repo into the workspace I am in and tell the agent to reference it.

Once we are finished with the planning, it’s complete autopilot from here: we implement. Before I started using agents in 2025, implementation was usually what took the most time: coding and typing out all the code. Now it’s a press of the Enter key and the agent is off to the races.
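The autopilot part is, at its core, a loop: hand the agent the same prompt over and over until the plan is done. Here is a minimal sketch, assuming the implementation plan is a markdown checklist where `- [ ]` marks open items. The `run_agent` callable is a hypothetical stand-in for however you invoke your harness, and the checklist format is my assumption, not a requirement of the workflow.

```python
from pathlib import Path
from typing import Callable


def unchecked_items(plan_text: str) -> int:
    """Count open checklist items ("- [ ]") in the plan."""
    return sum(
        1 for line in plan_text.splitlines()
        if line.lstrip().startswith("- [ ]")
    )


def ralph_loop(
    run_agent: Callable[[str], None],  # hypothetical: runs one agent session
    prompt: str,
    plan_path: Path,
    max_iterations: int = 50,
) -> int:
    """Re-run the agent with the same prompt until the plan has no open items.

    Returns the number of agent runs performed.
    """
    for iteration in range(1, max_iterations + 1):
        if unchecked_items(plan_path.read_text()) == 0:
            return iteration - 1  # plan is complete, stop looping
        run_agent(prompt)  # the agent is expected to update the plan itself
    return max_iterations  # safety cap reached
```

The `max_iterations` cap is there so a stuck agent cannot loop forever. Every run starts from the same prompt and the current state of the plan file, so each iteration gets a fresh context.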

But that is not all that happens, right? I thought I was talking about a scaffold at the beginning of this?