6 minutes

Harness: Scaffolding

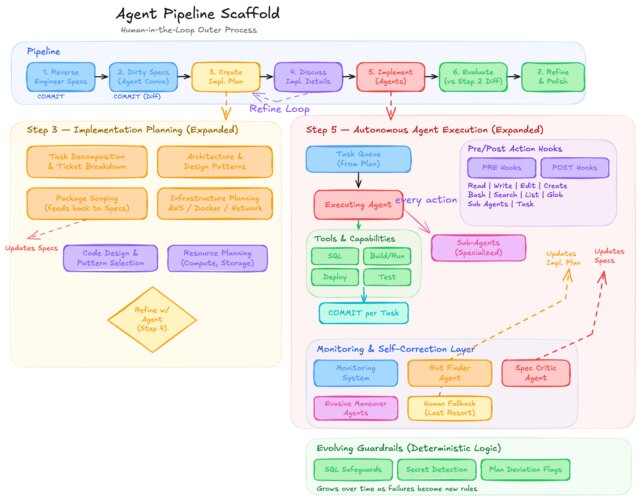

TLDR: Scaffolding is creating the determinist machine around the indeterministic to control them and get them to do what you need them to do. Agents are very interesting, they are capable of a lot, and you need to understand how they work. You need to understand how they think. To do this, I will talk to the agents, I will ask them how they approached a problem, and ask them to think about the problems that they faced. I will ask them to generate prompts for me from the discussions we have. Once we have that, I have an agent. That agent is now apart of my scaffold. The scaffold is a spawning engine that tells the decides what agent should spawn and perform work. Previous agents help the scaffold to run. Here is a link to the scaffold diagram on Excalidraw. If you have not used it, the Excalidraw MCP is amazing! thanks Anton!

In my previous article, that I released today, I mentioned how I used the Ralph Wiggum to get things done on autopilot. But I ended it on a cliff hanger. I really have agents that just do crazy things, man. And you’ve really got to help and watch them and understand why they do the things that they do. And sometimes these agents, they get stuck for whatever reason. And as the orchestrator, I have to understand why they got stuck. Why Couldn’t they just produce the code and implement the integration test to complete it? And I read their logs that they generate for me, and it’s filled with a lot of complicated stuff. Their struggle of the day, their one-hour period that they’re alive.

Agents are almost like humans, they think and they work, and they have determination beyond all measure. And if not told, they’ll try to even hack AWS.

So how do we keep them aligned? That’s pretty much the objective of scaffolding. How do we get them to work together? How can we get multiple of these agents to work at the same time? How can we get them to work efficiently for longer periods of time? If you look at the /insights recently, you might find a lot of details about where you stand as an agent orchrestator. Like as if Anthropic is handing out a hand to make us better or something, but reading it is seems like they are trying to get their agents to perform better. It’s real insightful.

Here is a Q&A with Claude on the discussion

Agent Pipeline Scaffold — Q&A

The Pipeline

We start off with Human in the Loop Processes:

- Reverse Engineer the Specs — Commit

- Talk to the agent and make the specs dirty — Commit for Diff of specs

- Create an implementation plan.

- Talk to the agent about implementation details

- Implement

- Evaluations — Evaluate all Implementation Commits against commit in 2

- Refine and Polish, if needed.

Step 3 — Implementation Planning

Q: When you say “implementation plan,” are you thinking task-level decomposition or more architectural?

Tasks/tickets, and architectural refining.

Q: Does the plan get committed as well?

Yes the plan gets committed, but we are not worried about that.

Q: In Step 4 you “talk to the agent about implementation details” — does that mean the plan from Step 3 is a draft that gets refined through conversation before implementation begins?

Yes it gets refined. There are a set of packages (software packages) that need to be scoped out and understood that get placed in both the specs and implementation plan. Code design and design patterns are important, as well as AWS resources and other resources like docker, computers, and networking.

Step 5 — Implementation

Q: Is the agent implementing against the plan task-by-task, or does it try to implement larger chunks at once?

Implement against the plan task-by-task, but sometimes the agent gets stuck. Sometimes the agent does something it should not. Sometimes the agent performs SQL DROP DATABASE. Sometimes the agent does not know what to do.

Q: Does each task/ticket result in its own commit?

Yes the agent will commit after each run.

Q: Does the agent have access to run the code, or is it purely writing code?

Yes the agent needs access to tools to build/run/deploy the code. It needs access, it needs ability to call SQL, it needs sub agents that have capabilities as well. It needs tools.

Q: Is there any feedback loop within Step 5 itself? Does the human review?

The human is not suppose to review the work at all, it should be up to all the agents, but sometimes (things do not go according to plan). I do not perform the evaluation in Step 6, Agents do the evaluation.

Q: When agents get stuck or hit something unexpected — does it loop back to the plan, or resolve inline?

When agents get stuck, this is a learning point to create scaffolding for the process. If there are no agents that can help in the process, then a human has to come in and provide help with the assistance of an agent.

Q: Are there any guardrails? What about hooks and monitoring?

There are hooks that do things for the agents. There is a monitoring system to monitor what the agents do. Pre and post execution hooks, that run on each action the agent performs. Read, List, Glob, Edit, Write, Create, Bash, Search, Sub Agents, Task.

Q: Does implementation include writing tests?

Testing is included in the implementation step. Because bugs are discovered during this process.

The Monitoring & Self-Correction Layer

Q: The DROP DATABASE scenario — is the monitoring preventative or reactive?

The monitoring system is there so that we can see it happens if it does, and understand why it did it, also helps us notice that it did it. It could be also like looking at secrets or something.

Q: When the agent “does something it should not” — is that a plan deviation or a dangerous action?

It can be both, and we need to understand why it happened, and create deterministic logic to prevent it from happening, and also other agents to see that it will happen and fix the problems in the implementation plan to remedy a solution.

Q: The “learning point to create scaffolding” — does that mean you’re literally updating the pipeline/hooks/guardrails for future runs?

Yep. Here is an example. So, for instance, I have agents that are implementing and they get stuck within the code base. They get stuck on integrations because the integrations are more like black box and they don’t have context about that integration. So I have a rut finder. The rut finder looks at the implementation plan. If it finds that the previous agents struggled at something multiple times, it will go in and do an exploratory testing and experiment with the tests that are associated with what the agents implementing were working on. During this exploratory testing, it will come back and update the implementation plan with what it found out so they can do a course correction. Another example could be an agent who critiques the specs because the specs no longer apply because of certain implementation details.

Q: The sub-agents — are these specialized or general-purpose?

Yeah they can be specialized or managerial or Evasive Maneuvers Specialized Agents.