
Harness: Don’t Generate Specs as Code

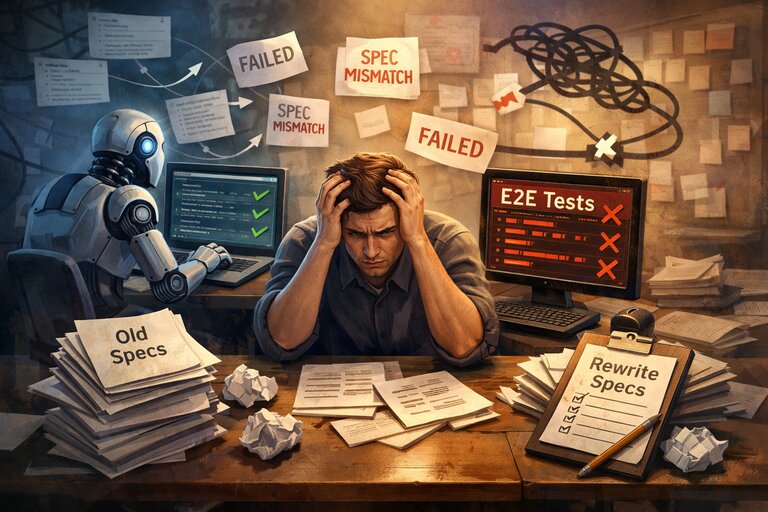

TLDR: Using agents to write code first, and then solidifying that code into “specs” with unit tests, doubles the work you have to do when it’s time to write e2e tests, because the application’s behavior doesn’t match those specs. The best approach is to write out your specs first, keep them, and have your agents align with them when reviewing your implementation.

Scaffolding: so what is it? It’s a way to get autonomous loops to generate the prompts that drive agents to build. The first step is to curate the list of specs you need. Here is an example of some specs I created for a project I worked on.

In my previous article, I talked about how I started building with the agents first instead of starting from the specs.

So I’ll start from that perspective. At first, when I was building, I would write the specs with the agent and have it generate a plan to code. Then it would generate the code, I’d create a new plan, and rinse and repeat. The thing is, the plan is short-lived.

Then you would validate against those specs, so that you have a source of truth for what was implemented. Except there is no evaluation beyond the fact that the code was written, and, most importantly, no evaluation by you. Next you ask the agent to generate unit tests. Bingo: it writes tests that fit the generated code. This creates a situation where specs could be missing, incorrect, or anything else. After all, I was never reviewing the implemented work with the agent.

At one point I also had the agent track the progress of the project in a working.md doc. But this is similar to.

There is no written document to verify the SPECIFICS of the code! But you planned the session with the agent, right? It wrote out a long plan in the .claude/plans folder, right? Yes, it did, so there’s that. But that isn’t a spec; that’s implementation details. Unless you have specs written by a project manager that clearly define the scope of the work you’re building, there’s no way to validate the actual intent and the detailed specifications your application needs, at least not without a lot of work.
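To make that concrete, here is one lightweight way (my own sketch, not part of the workflow described above) to keep a written spec checkable: give each requirement a stable ID and have every test cite the ID it verifies. The `SPEC-###` format, the sample specs, and the `uncovered_specs` helper are all hypothetical; in a real project the two strings would be read from your spec file and your test directory.

```python
import re

# Hypothetical spec document kept alongside the code. Each requirement
# has a stable ID so tests and reviews can point back to it.
SPECS_MD = """
- SPEC-001: Orders of $100 or more get free shipping.
- SPEC-002: A user can cancel an order until it has shipped.
- SPEC-003: Cancelled orders are refunded to the original payment method.
"""

# Hypothetical test source (unit or e2e); each test cites the spec it verifies.
TESTS_SRC = '''
def test_free_shipping_boundary():  # SPEC-001
    ...

def test_cancel_before_ship():  # SPEC-002
    ...
'''

SPEC_ID = re.compile(r"\bSPEC-\d{3}\b")


def spec_ids(text: str) -> set[str]:
    """Collect every spec ID mentioned in a block of text."""
    return set(SPEC_ID.findall(text))


def uncovered_specs(specs: str, tests: str) -> list[str]:
    """Return spec IDs that no test references, sorted for stable output."""
    return sorted(spec_ids(specs) - spec_ids(tests))


if __name__ == "__main__":
    for spec in uncovered_specs(SPECS_MD, TESTS_SRC):
        print(f"no test covers {spec}")
```

Running it prints `no test covers SPEC-003`. The point is that the spec, not the generated code, is what the tests get measured against, so a missing behavior shows up as a gap instead of silently passing.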

So now you have unit tests, and you have an implementation that the unit tests support. Basically, the unit tests just validate the code you generated. Really doing nothing there, Jimbo.

Taking a step back, you might say: sure, it’s not doing much, but at least you’ve coded your specs with the agent in the form of code and solidified them with unit tests. Good job there, Copernicus, really went around the world with that one.

So you repeat this over several iterations across the entire project, and then it comes time for e2e tests.

Now I need e2e tests to cover the behavior of the product. Remember, you’ve already written out what the specs were: they’re the 5k LOC of unit tests that validate the code. Now we’re just covering the behavior of the application. The plan files generated by Claude Code are long gone, forgotten until the end of time.

And when the behavior of the application fails, you have to rewrite the specs you originally wrote, which basically doubles the amount of work you were doing.

This is a current problem with AI agents.

To prevent this from happening, we have to write out our specs first. Here is an example of some specs I wrote for a project I built this week.